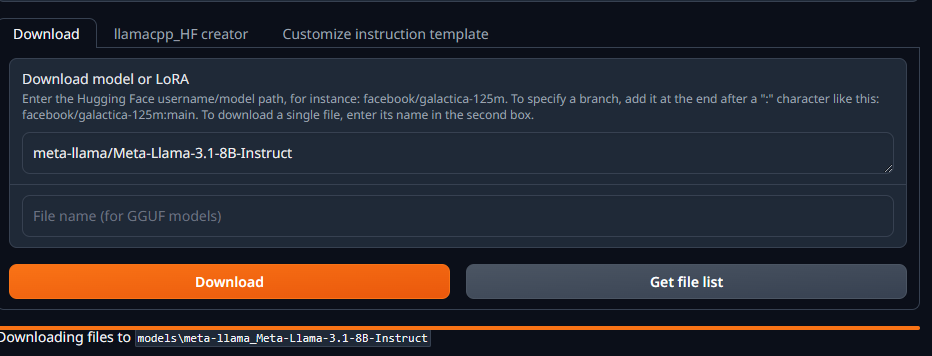

If you are using Text Gen Web UI and hit the error “403 Client Error: Forbidden for url”, this article shows how to fix it quickly. The error occurs when you try to download a gated (access-restricted) AI model to your PC. For example, I was trying to download the latest Llama 3.1 8B model via the Text Gen Web UI downloader, and it showed the following screen:

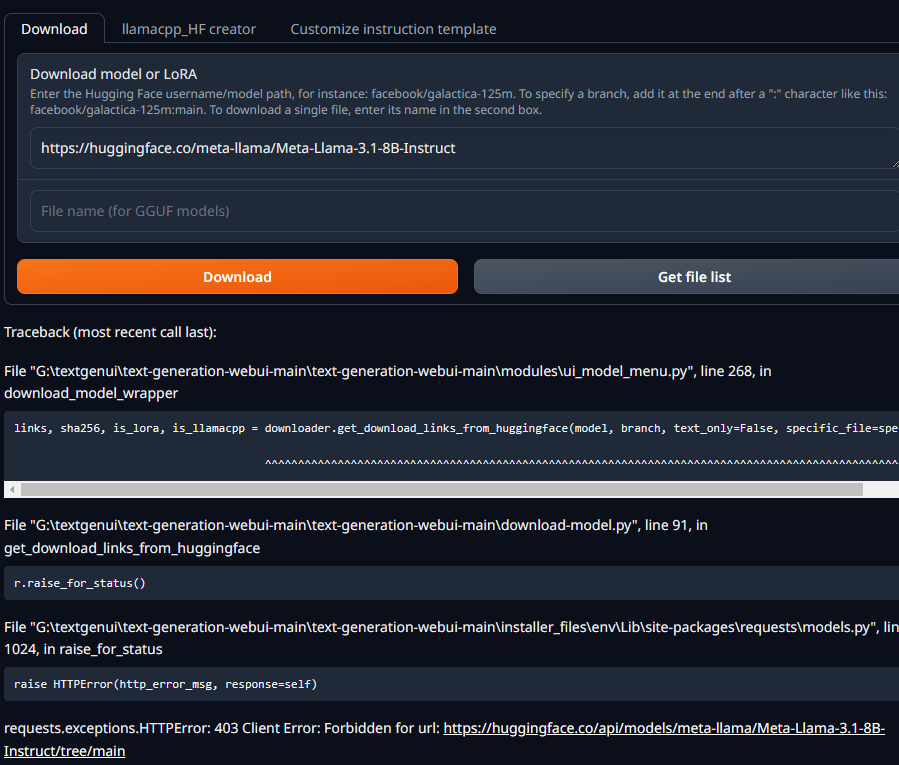

    Traceback (most recent call last):
      File "G:\textgenui\text-generation-webui-main\text-generation-webui-main\modules\ui_model_menu.py", line 268, in download_model_wrapper
        links, sha256, is_lora, is_llamacpp = downloader.get_download_links_from_huggingface(model, branch, text_only=False, specific_file=specific_file)
                                              ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "G:\textgenui\text-generation-webui-main\text-generation-webui-main\download-model.py", line 91, in get_download_links_from_huggingface
        r.raise_for_status()
      File "G:\textgenui\text-generation-webui-main\text-generation-webui-main\installer_files\env\Lib\site-packages\requests\models.py", line 1024, in raise_for_status
        raise HTTPError(http_error_msg, response=self)
    requests.exceptions.HTTPError: 403 Client Error: Forbidden for url: https://huggingface.co/api/models/meta-llama/Meta-Llama-3.1-8B-Instruct/tree/main

How to Fix the 403 Client Error: Forbidden for url

I had already spent quite some time on this error before. Back then it was easier to search Hugging Face for a re-uploaded copy of the model that didn’t require authentication, so if you do not want to authenticate with your account, you can do the same. This time I wanted to fix it for good, because companies are releasing new models too quickly to keep hunting for copies. Documentation on fixing this issue was nonexistent.

Steps to allow authentication with your account:

- Register at Hugging Face and visit the following page to generate an access token: https://huggingface.co/settings/tokens

- Open your local Text Gen Web UI folder and edit the file one_click.py

The file starts with a block of imports. Add the os.environ line right after them, at line 11:

    import argparse
    import glob
    import hashlib
    import os
    import platform
    import re
    import signal
    import site
    import subprocess
    import sys

    os.environ['HF_TOKEN'] = "hf_dso345345N43dfgsdNLWf3452zad4dsf"

Replace the token above with the token generated in step 1.

Make sure to restart Text Gen Web UI by running start_windows.bat again so the change takes effect.
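If you want to double-check that the token is actually visible to Python before retrying the download, a small sketch like the one below can help. It is not part of Text Gen Web UI: `mask_token` and `token_report` are hypothetical helper names made up for this example, and the `hf_` prefix check is only a hint, based on the assumption that tokens generated on the settings page start with `hf_`.

```python
import os


def mask_token(token):
    """Hide most of the token so it can be printed or pasted safely."""
    if len(token) <= 8:
        return "*" * len(token)
    return token[:4] + "*" * (len(token) - 8) + token[-4:]


def token_report(env=None):
    """Describe the HF_TOKEN found in the given mapping (os.environ by default)."""
    env = os.environ if env is None else env
    token = env.get("HF_TOKEN", "")
    if not token:
        return "HF_TOKEN is not set"
    if not token.startswith("hf_"):
        # Assumption: tokens from the settings page begin with "hf_".
        return "HF_TOKEN is set but does not start with 'hf_': " + mask_token(token)
    return "HF_TOKEN is set: " + mask_token(token)
```

Calling `print(token_report())` in the same Python environment that runs the UI should report the token as set once the edited one_click.py has been loaded. If it says the variable is not set, the line was probably added to the wrong file or the UI was not restarted.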

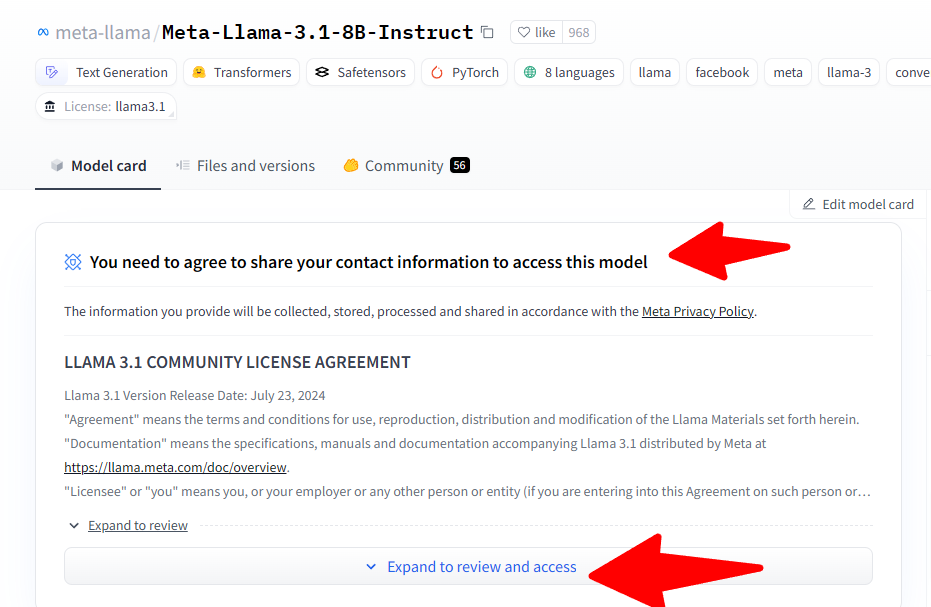

That’s it. Now you can download the latest AI models from the official Meta and Google repos as soon as they become available. For Meta models, you also need to agree to share your details on the model page, and approval takes some time.

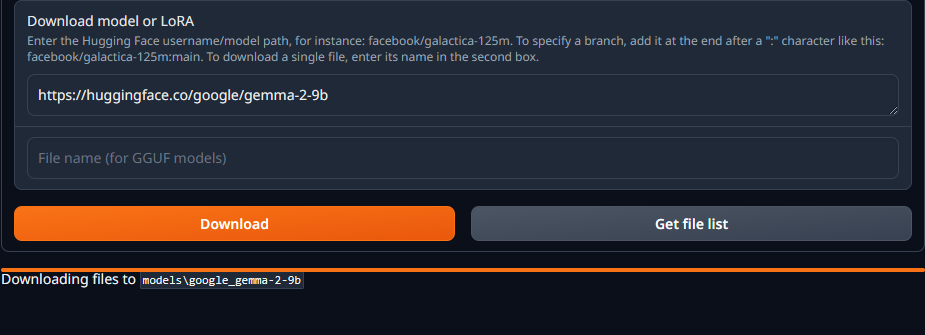

To quickly test whether token authentication is working correctly, try downloading the following model:

https://huggingface.co/google/gemma-2-9b

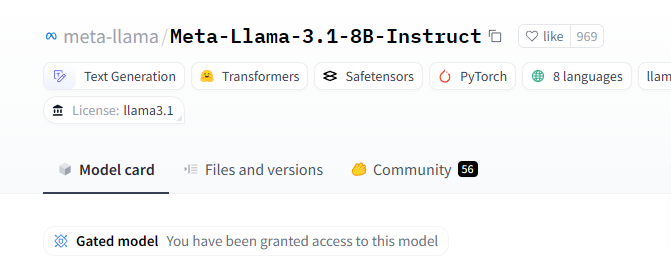
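The same check can also be run outside the UI. The sketch below requests the same kind of API URL that appears in the traceback and attaches the token as a Bearer header. It uses only the Python standard library; `build_request`, `check_access`, and `explain_status` are hypothetical names for this example, and the status explanations are a rough guide, not an exhaustive list of what Hugging Face may return.

```python
import urllib.error
import urllib.request

# Same URL pattern as the one shown in the traceback.
API_TREE_URL = "https://huggingface.co/api/models/{repo_id}/tree/main"


def build_request(repo_id, token=None):
    """Build the file-listing request, adding the token if one is given."""
    request = urllib.request.Request(API_TREE_URL.format(repo_id=repo_id))
    if token:
        request.add_header("Authorization", f"Bearer {token}")
    return request


def check_access(repo_id, token=None, timeout=30):
    """Return the HTTP status code for the repo's file listing (needs network)."""
    try:
        with urllib.request.urlopen(build_request(repo_id, token), timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


def explain_status(status):
    """Translate a status code into a short hint."""
    if status == 200:
        return "access OK, the download should work"
    if status in (401, 403):
        return "access denied: check the token and whether access to the repo was granted"
    return f"unexpected status {status}"


# Example (requires network access and a real token):
#   import os
#   status = check_access("google/gemma-2-9b", os.environ.get("HF_TOKEN"))
#   print(status, explain_status(status))
```

If the example prints 200 for the test model but the Meta model still returns 403, the token is most likely fine and the access request for that repo is still pending.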

Once you have been granted access to the Meta model, it will look like this:

Now you can start the download without any issues.