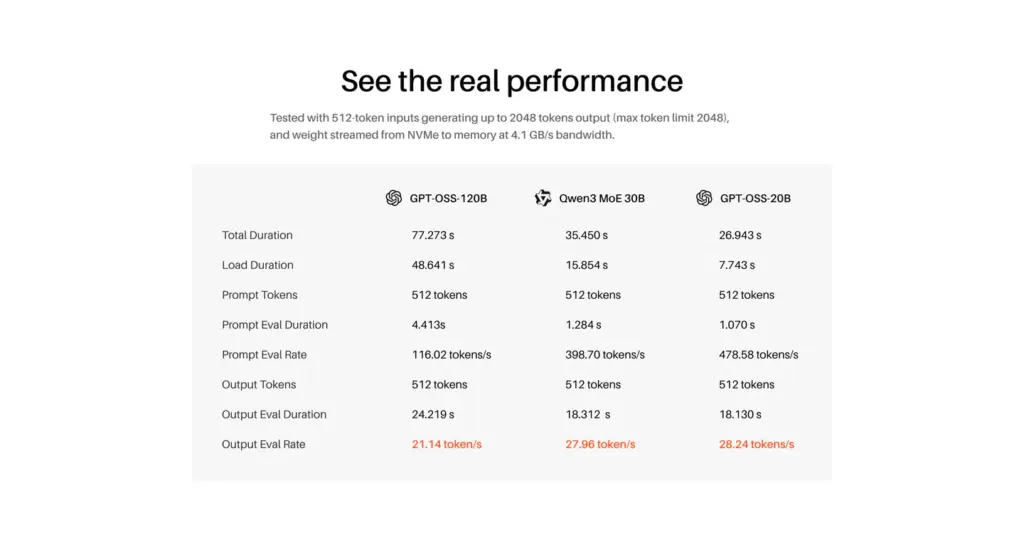

Tiiny, a new company building something different in the local LLM space, has unveiled a pocket-sized mini LLM computer that will run the 120B GPT-OSS LLM at a generous 21 tok/s while consuming only 30-65 watts of power. So, how did they cram all that power into such a tiny package? The secret sauce lies in two key innovations: TurboSparse, a clever technique that squeezes out maximum efficiency without sacrificing the AI’s “intelligence,” and PowerInfer, an open-source engine that smartly divides the workload between the device’s processor and its dedicated AI chip. Together, they deliver server-grade performance at a fraction of the cost and energy. With specs like a 12-core Arm processor, 80GB of DDR5 RAM, and 1TB of NVMe storage, all weighing just over 300g, this tiny thing is just what local LLM enthusiasts need and crave.
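To put the quoted speed and power figures in perspective, here is a rough back-of-the-envelope sketch of energy per generated token. It assumes the 21 tok/s rate holds across the whole 30-65 W range, which the announcement does not actually state, so treat the results as bounds rather than measurements. The function names are made up for this illustration.

```python
# Figures quoted in the article.
TOKENS_PER_SECOND = 21.0
POWER_WATTS_LOW = 30.0
POWER_WATTS_HIGH = 65.0


def joules_per_token(power_watts: float, tokens_per_second: float) -> float:
    """Energy per generated token; one watt is one joule per second."""
    return power_watts / tokens_per_second


def watt_hours_per_1k_tokens(power_watts: float, tokens_per_second: float) -> float:
    """Energy needed to generate 1,000 tokens, in watt-hours."""
    seconds = 1000.0 / tokens_per_second
    return power_watts * seconds / 3600.0


# Assumption: 21 tok/s is sustained at both ends of the power range.
best_case = joules_per_token(POWER_WATTS_LOW, TOKENS_PER_SECOND)
worst_case = joules_per_token(POWER_WATTS_HIGH, TOKENS_PER_SECOND)

print(f"{best_case:.2f} to {worst_case:.2f} J per token")
print(
    f"{watt_hours_per_1k_tokens(POWER_WATTS_LOW, TOKENS_PER_SECOND):.2f} to "
    f"{watt_hours_per_1k_tokens(POWER_WATTS_HIGH, TOKENS_PER_SECOND):.2f} Wh per 1,000 tokens"
)
```

Under that assumption, the device would spend roughly 1.4 to 3.1 joules per token, or well under one watt-hour per 1,000 tokens.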

Tiiny AI is set to showcase the entire ecosystem, including one-click installs for popular AI models, at CES in January 2026. Pricing information will be released later. Since it does not come with a dedicated GPU, it will be interesting to see how it gets priced under normal circumstances.

Check out real-world LLM perf numbers of the Tiiny LLM pocket computer:

More info on the Tiiny hardware:

The device's 80GB of DDR5 RAM is shared between the CPU and the NPU. The NPU, rated at 160 TOPS, gets 48GB dedicated, while the remaining 32GB is addressed by the CPU, which contributes another 30 TOPS, for a total of 190 TOPS.
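As a sanity check on those numbers, the sketch below adds up the memory and compute split and estimates whether a 120B-parameter model could fit. The 4 bits per weight is an assumption made for this illustration, not a figure from Tiiny, and the estimate ignores the KV cache, activations, and the operating system.

```python
# Figures quoted in the article.
TOTAL_RAM_GB = 80
NPU_RAM_GB = 48
CPU_RAM_GB = 32
NPU_TOPS = 160
CPU_TOPS = 30

# Assumption for illustration only: weights stored at roughly 4 bits each.
ASSUMED_BITS_PER_WEIGHT = 4


def weights_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight footprint in decimal GB (1e9 params * bits / 8 bytes)."""
    return params_billions * bits_per_weight / 8


# The quoted split should add up to the quoted totals.
assert NPU_RAM_GB + CPU_RAM_GB == TOTAL_RAM_GB
total_tops = NPU_TOPS + CPU_TOPS

weights_gb = weights_size_gb(120, ASSUMED_BITS_PER_WEIGHT)
fits_total = weights_gb <= TOTAL_RAM_GB
fits_npu_only = weights_gb <= NPU_RAM_GB

print(f"Total compute: {total_tops} TOPS")
print(f"Estimated weights: {weights_gb:.0f} GB")
print(f"Fits in total RAM: {fits_total}, fits in NPU RAM alone: {fits_npu_only}")
```

If the 4-bit assumption is close, the weights would fit in the full 80GB but not in the NPU's 48GB alone. That would make splitting the work between the CPU and the NPU a necessity rather than an optimization.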

Let's just hope that memory pricing returns to normal next year; otherwise even devices like this one will be out of reach for many enthusiasts.

Check out the manufacturer: Tiiny