You can now generate music from text locally with ACE-Step, a new state-of-the-art (SOTA), Apache-licensed foundation model that was just released to the public. It is similar to Suno’s model, but it runs on consumer hardware: anything above 8GB of VRAM with swap will work, though at least 16GB of VRAM is recommended for the best experience. The developers of ACE-Step want to create a “Stable Diffusion moment” for music: a flexible, powerful platform that democratizes music creation and fuels the next wave of artistic innovation.

By combining diffusion-based generation with Sana’s Deep Compression AutoEncoder (DCAE) and a lightweight linear transformer, ACE-Step delivers a breakthrough in music synthesis. Integrated with MERT and m-hubert for semantic alignment (REPA) during training, it achieves rapid convergence, synthesizing up to 4 minutes of music in just 20 seconds on an A100 GPU. That’s 15× faster than LLM-based baselines, with superior coherence and alignment across melody, harmony, rhythm, and lyrics.
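As a quick sanity check on those figures, the arithmetic can be sketched as below. The helper name `real_time_factor` is hypothetical and not part of ACE-Step; the only inputs are the 4-minute and 20-second numbers quoted above.

```python
def real_time_factor(audio_seconds: float, generation_seconds: float) -> float:
    """Return how many seconds of audio are produced per second of compute."""
    if generation_seconds <= 0:
        raise ValueError("generation_seconds must be positive")
    return audio_seconds / generation_seconds


# Figures quoted above: up to 4 minutes of music in about 20 seconds on an A100.
audio_seconds = 4 * 60
generation_seconds = 20

rtf = real_time_factor(audio_seconds, generation_seconds)
print(f"{audio_seconds} s of audio in {generation_seconds} s -> {rtf:.0f}x real time")
```

In other words, the quoted A100 figure works out to roughly 12 seconds of music per second of compute. Consumer GPUs will likely be slower than that.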

ACE-Step doesn’t stop at speed. It preserves intricate acoustic details, enabling advanced creative controls like voice cloning, lyric editing, remixing, and track generation (e.g., lyric2vocal, singing2accompaniment). Rather than creating another rigid text-to-music pipeline, the developers’ stated goal is to build a versatile foundation model for music AI: one that is fast, efficient, and adaptable. This architecture lets developers train specialized sub-tasks on top of it, paving the way for tools that integrate seamlessly into the workflows of artists, producers, and creators.

How to generate songs locally from text with ACE-Step:

# Installation Guide for ACE-Step

## Prerequisites

# Ensure Python is installed. Download from https://python.org if needed.

# Required: Python 3.10

# Recommended: Use Conda or venv for virtual environment management.
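To confirm that the interpreter you are about to use matches the Python 3.10 requirement, a small check along these lines can help. This is a minimal sketch using only the standard library; the helper name `is_required_python` is hypothetical and not part of ACE-Step.

```python
import sys

REQUIRED = (3, 10)  # major, minor version stated in the prerequisites


def is_required_python(version_info=sys.version_info, required=REQUIRED) -> bool:
    """True when the interpreter's major.minor matches the required version."""
    return tuple(version_info[:2]) == tuple(required)


current = ".".join(str(part) for part in sys.version_info[:3])
if is_required_python():
    print(f"Python {current}: OK")
else:
    print(f"Python {current}: expected {REQUIRED[0]}.{REQUIRED[1]}.x")
```

Run it with the same `python` command you plan to use for creating the environment, since several Python versions can be installed side by side.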

## Environment Setup

# It’s strongly advised to use a virtual environment to manage dependencies and avoid conflicts.

# Choose one of the following methods: Conda or venv.

### Option 1: Using Conda

# Create a Conda environment named 'ace_step' with Python 3.10

conda create -n ace_step python=3.10 -y

# Activate the environment

conda activate ace_step

### Option 2: Using venv

# Ensure you're using Python 3.10

# Create a virtual environment named 'venv'

python -m venv venv

# Activate the environment

# On Windows (cmd.exe):

venv\Scripts\activate.bat

# On Windows (PowerShell):

# If you face execution policy errors, run this first:

# Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope Process

.\venv\Scripts\Activate.ps1

# On Linux/macOS (bash/zsh):

source venv/bin/activate
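The three activation variants above differ only by operating system and shell. As an illustration, the mapping can be sketched in Python. The function `activation_command` is a hypothetical helper, not part of ACE-Step or of Python’s `venv` module, and it only returns the command as text rather than running it.

```python
import os


def activation_command(os_name: str = os.name, powershell: bool = False,
                       env_dir: str = "venv") -> str:
    """Return the activation command listed above for the given platform."""
    if os_name == "nt":  # Windows
        if powershell:
            return ".\\" + env_dir + "\\Scripts\\Activate.ps1"
        return env_dir + "\\Scripts\\activate.bat"
    # Linux/macOS with bash or zsh
    return "source " + env_dir + "/bin/activate"


# Show the command that applies to the machine running this script.
print(activation_command())
```

Activation has to happen in your own shell session, which is why the helper prints the command instead of executing it.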

## Install Dependencies

# Install dependencies from requirements.txt (run these commands from the root of the ACE-Step repository, where requirements.txt lives)

# For macOS/Linux users:

pip install -r requirements.txt

# For Windows users:

# Install PyTorch, TorchAudio, and TorchVision (adjust CUDA version as needed, e.g., cu126)

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu126

# Then install remaining dependencies

pip install -r requirements.txt
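After installing, it can be worth checking that PyTorch sees your GPU and how much VRAM it reports. The sketch below uses `torch.cuda.is_available()` and `torch.cuda.get_device_properties()`. The helper `vram_tier` and its labels are assumptions for illustration, with thresholds taken loosely from the 8GB and 16GB figures mentioned at the top of this post.

```python
def vram_tier(total_gib: float) -> str:
    """Classify VRAM against the rough 8GB / 16GB guidance (assumed thresholds)."""
    if total_gib >= 16:
        return "recommended"
    if total_gib >= 8:
        return "workable with swap"
    return "below the stated minimum"


try:
    import torch
except ImportError:
    print("PyTorch is not installed in this environment.")
else:
    print(f"PyTorch {torch.__version__}")
    if torch.cuda.is_available():
        # Inspect the first CUDA device only.
        props = torch.cuda.get_device_properties(0)
        total_gib = props.total_memory / 1024**3
        print(f"{props.name}: {total_gib:.1f} GiB VRAM ({vram_tier(total_gib)})")
    else:
        print("No CUDA device detected.")
```

Cards often report slightly less memory than their advertised size, so treat a result just under a threshold as a borderline case rather than a hard failure.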

## Run the Application

python app.py

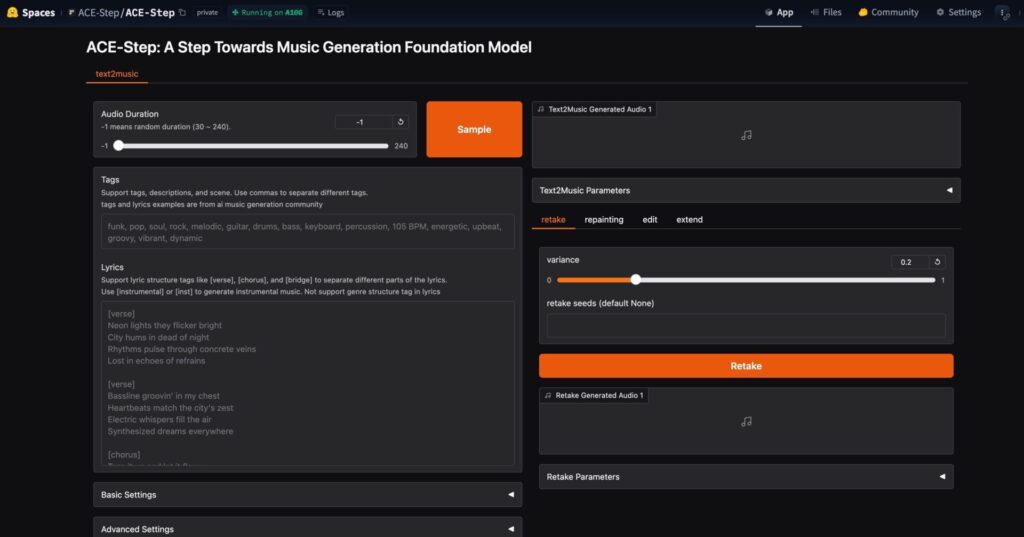

You will be greeted with the following user interface to generate local music

Check out a variety of generated songs at the official link below:

A free-to-use hosted version of ACE-Step is currently available on Hugging Face if you want to quickly try out the capabilities of the text-to-music (T2M) model.