Running AI models on localhost as an API that other programs can call is a great way to use local resources for text generation tasks, for example if you wish to use the API in VS Code for code completion or generation via plugins. People normally install Ollama for this, but if you already have the TextGen Web UI installed on your PC, there is no need to install Ollama. Here is how to get started:

TextGen Web UI as API In Windows 10/11

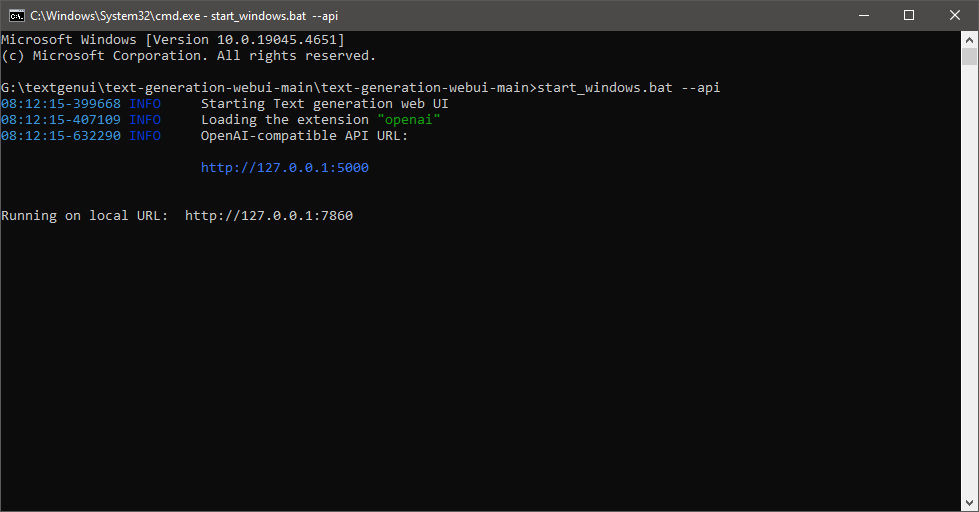

Go to the working directory that contains the start_windows.bat file. Type cmd in the folder's address bar and press Enter to open a Command Prompt there, then run start_windows.bat with the --api flag (the part after the > prompt below):

G:\textgenui\text-generation-webui-main\text-generation-webui-main>start_windows.bat --api

Here is how it will look, and it will output the API URL:

08:12:15-399668 INFO Starting Text generation web UI
08:12:15-407109 INFO Loading the extension "openai"
08:12:15-632290 INFO OpenAI-compatible API URL:

http://127.0.0.1:5000

Running on local URL: http://127.0.0.1:7860

Now load your favorite AI model as usual through the TextGen Web UI interface on port 7860. The API is then available at http://127.0.0.1:5000.

TextGen Web UI as API In Linux

Similarly, on Linux, run the following from the directory that contains start_linux.sh:

./start_linux.sh --api
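Whichever platform you start it on, a script can confirm that the API is listening before you point other programs at it. Below is a minimal Python sketch using only the standard library. It assumes the server answers the OpenAI-style model listing route /v1/models on the API URL from the startup log; the helper names models_url and api_is_up are made up for this illustration.

```python
import json
import urllib.error
import urllib.request

# API URL as printed in the startup log; change it if yours differs.
API_BASE = "http://127.0.0.1:5000"


def models_url(base: str = API_BASE) -> str:
    """Build the URL of the model listing route (assumed: /v1/models)."""
    return base.rstrip("/") + "/v1/models"


def api_is_up(base: str = API_BASE, timeout: float = 3.0) -> bool:
    """Return True if the server answers the listing request with HTTP 200 and JSON."""
    try:
        with urllib.request.urlopen(models_url(base), timeout=timeout) as resp:
            json.load(resp)  # raises ValueError if the body is not JSON
            return resp.status == 200
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, HTTP error, or non-JSON body.
        return False


if __name__ == "__main__":
    print("API reachable:", api_is_up())
```

If this prints False, check that the server was started with --api and that the port matches the one in your log.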

Access the API

You can now access the API endpoints. For example, to generate text completions, you can use the following curl command:

curl http://127.0.0.1:5000/v1/completions \
  -H "Content-Type: application/json" \
  -d '{
    "prompt": "This is a cake recipe:\n\n1.",
    "max_tokens": 500,
    "temperature": 1,
    "top_p": 0.9,
    "seed": 10
  }'

How to Test if the local API has started and is working:

Visit the following URL:

http://127.0.0.1:5000/docs

If you see output like the one below, your API has started successfully and is working.
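Separately from the browser check, the completion request from the "Access the API" section can also be sent from a program. The following is a minimal Python sketch using only the standard library. It posts the same JSON fields to the same /v1/completions URL; the function names are hypothetical, and reading the result from choices[0].text assumes the response follows the OpenAI completions format.

```python
import json
import urllib.request

# Same endpoint as in the curl example.
API_URL = "http://127.0.0.1:5000/v1/completions"


def build_request(prompt: str, max_tokens: int = 500, temperature: float = 1,
                  top_p: float = 0.9, seed: int = 10) -> urllib.request.Request:
    """Build a POST request carrying the same JSON body as the curl example."""
    payload = {
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "top_p": top_p,
        "seed": seed,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(response_body: dict) -> str:
    """Return the generated text from a completions response.

    Assumption: the body has the OpenAI shape {"choices": [{"text": "..."}]}.
    """
    return response_body["choices"][0]["text"]


def complete(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local API and return the generated text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        return extract_text(json.load(resp))


if __name__ == "__main__":
    try:
        print(complete("This is a cake recipe:\n\n1."))
    except OSError as exc:  # URLError is a subclass of OSError
        print("Request failed; is the server running with --api?", exc)
```

Keeping the request construction in its own function makes it easy to inspect the payload without a running server, and the long default timeout leaves room for slow generation on local hardware.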